As you all know, Mango is not only meant to create an awesome short film; it is also a way to focus and improve Blender development. We already have great new tools, but for a real open source VFX pipeline we need a lot more!

Here are the main categories of development targets we would like to work on over the next 6 months (in no particular order):

- Camera and motion tracking

- Photo-realistic rendering – Cycles

- Compositing

- Masking

- Green screen keying

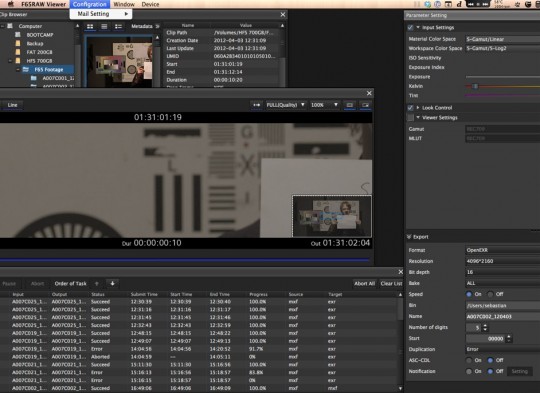

- Color pipeline and grading tools

- Fire/smoke/volumetrics & explosions

- Fix the Blender depsgraph (dependency graph)

- Asset management / Library linking

As usual, how far we can get with all of this is quite unknown. Typically, deadline stress takes over at some point, forcing developers to work only on what's essential rather than on what's nice to have or what had been planned. Getting more sponsors and donations will definitely help, though! :)

Below are per-category notes that I gathered during the VFX roundtable at Blender Conference 2011 and in discussions with other artists such as Francois Tarlier, Troy Sobotka, Francesco Paglia, Bartek Skorupa and many others, plus some of my own favorites.

I have to warn you. This post is looong. :)

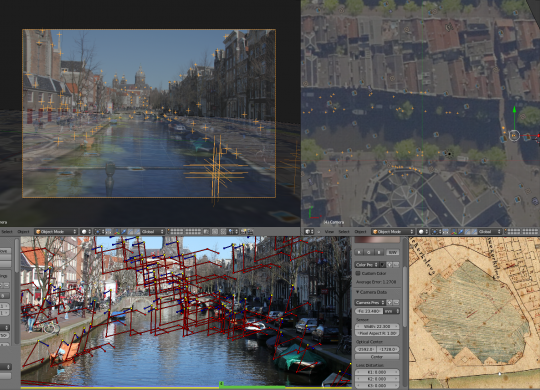

Camera and Motion Tracking

Even though the tracker is already fully usable, including object tracking and auto-refinement, there is still room for improvement.

One major feature we are waiting for is planar tracking. Some of you might know Mocha, a planar tracker widely used in the industry, which makes masking, digital makeup, patching and similar tasks fast and easy. In many situations you don't need a full-fledged 3D track just to manipulate certain areas of your footage. All you need is a tracker that accounts for the rotation, translation and scale of the tracked feature in 2D space, for example to generate a mask that automatically follows the movements and transformations of the side of a car as it drives by.

Keir Mierle has something in the works that would allow such workflows. Obviously that would be tremendously helpful for masking and rotoscoping as well.
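To make the planar-tracking idea concrete, here is a minimal sketch, assuming the tracker reports a few corresponding 2D points on the tracked surface for two frames. It fits the 2D similarity transform described above (rotation, uniform scale, translation) by least squares, treating points as complex numbers so the transform is simply `z' = a*z + b`, and then moves mask vertices with it. The function names `estimate_similarity` and `transform_mask` are hypothetical and not part of Blender's API; a full planar tracker would generally also handle perspective distortion (a homography), which this sketch does not.

```python
import cmath
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_similarity(src: List[Point], dst: List[Point]) -> Tuple[complex, complex]:
    """Least-squares 2D similarity transform mapping src points onto dst points.

    Returns (a, b) such that dst ~= a * src + b in complex form, where
    abs(a) is the scale, phase(a) the rotation and b the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching point pairs")
    zs = [complex(x, y) for x, y in src]
    zd = [complex(x, y) for x, y in dst]
    n = len(zs)
    ms = sum(zs) / n  # centroid of the source points
    md = sum(zd) / n  # centroid of the destination points
    # Closed-form least-squares solution on centered coordinates.
    num = sum((d - md) * (s - ms).conjugate() for s, d in zip(zs, zd))
    den = sum(abs(s - ms) ** 2 for s in zs)
    if den == 0:
        raise ValueError("source points must not all coincide")
    a = num / den
    b = md - a * ms
    return a, b


def transform_mask(mask: List[Point], a: complex, b: complex) -> List[Point]:
    """Apply the similarity transform to every mask vertex."""
    out = []
    for x, y in mask:
        z = a * complex(x, y) + b
        out.append((z.real, z.imag))
    return out


if __name__ == "__main__":
    # Hypothetical tracked corners of a flat surface in frame 1 and frame 2:
    # rotated 90 degrees, scaled by 2 and shifted 10 units along x.
    frame1 = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    frame2 = [(10.0, 0.0), (10.0, 2.0), (8.0, 2.0), (8.0, 0.0)]
    a, b = estimate_similarity(frame1, frame2)
    print("scale:", abs(a), "rotation (deg):", math.degrees(cmath.phase(a)), "translation:", b)
    # A mask vertex in the middle of the surface follows the track.
    print(transform_mask([(0.5, 0.5)], a, b))
```

Fitting the transform to several tracked points rather than a single one averages out small tracking errors, and the same `(a, b)` pair can be applied to every vertex of a mask drawn on the first frame.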
