As you all know, Mango is not only meant to create an awesome short film, but also a way to focus and improve Blender development. We already have great new tools, but for a real open source VFX pipeline we need a lot more!

Here are the main categories of development targets we would like to work on over the next 6 months (in random order):

- Camera and motion tracking

- Photo-realistic rendering – Cycles

- Compositing

- Masking

- Green screen keying

- Color pipeline and Grading tools

- Fire/smoke/volumetrics & explosions

- Fix the Blender deps-graph

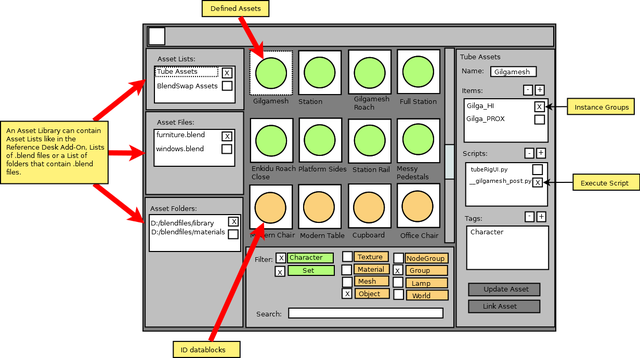

- Asset management / Library linking

How far we can take everything is, as usual, quite unknown. Typically the deadline stress takes over at some point, forcing developers to work only on what’s essential and not on what’s nice to have or had been planned. Getting more sponsors and donations will definitely help though! :)

Below are per-category notes that I gathered during the VFX roundtable at Blender Conference 2011 and in discussions with other artists like Francois Tarlier, Troy Sobotka, Francesco Paglia, Bartek Skorupa and many others, along with some of my own favorites.

I have to warn you. This post is looong. :)

Camera and Motion Tracking

Even though the tracker is already totally usable, including object tracking and auto-refinement, there is still room for improvement.

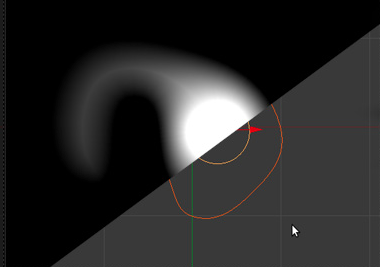

One major feature that we are waiting for to be included is planar tracking. Some of you might know Mocha, a planar tracker widely used in the industry, with which you can do fast and easy masking, digital makeup, patching etc. In a lot of situations you don’t really need a full-fledged 3d track just to manipulate certain areas of your footage. All you need is a tracker that can take into account rotation, translation and scale of the tracked feature in 2d space, for example in order to generate a mask that automatically follows the movements and transformations of the side of a car as it drives by.

Keir Mierle has something in the works that would allow such workflows. Obviously that would be tremendously helpful for masking and rotoscoping as well.
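To make the rotation/translation/scale idea concrete, here is a minimal Python sketch that fits such a 2D transform to a handful of tracked markers between two frames and then moves mask points with it. It is only an illustration under simple assumptions, not Keir's work or anything that exists in Blender: the names `fit_similarity` and `apply_similarity` are made up for this example, and a real planar tracker can also account for shear and perspective, which this sketch ignores.

```python
import cmath
import math


def fit_similarity(src, dst):
    """Least-squares 2D similarity transform mapping src points onto dst points.

    Points are (x, y) tuples. Treating each point as a complex number keeps
    the algebra short: dst ~ a * src + b, where abs(a) is the scale,
    cmath.phase(a) is the rotation angle and b is the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching point pairs")
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    # Closed-form least-squares solution on the centred points.
    num = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    if den == 0:
        raise ValueError("source points must not all coincide")
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def apply_similarity(a, b, points):
    """Move a list of (x, y) points (e.g. mask corners) with the fitted transform."""
    moved = []
    for x, y in points:
        z = a * complex(x, y) + b
        moved.append((z.real, z.imag))
    return moved


# Hypothetical marker positions on the side of a car in two frames.
markers_frame_1 = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
markers_frame_2 = [(3.0, 4.0), (3.0, 6.0), (1.0, 4.0)]
a, b = fit_similarity(markers_frame_1, markers_frame_2)
print("scale:", abs(a), "rotation (degrees):", math.degrees(cmath.phase(a)))
print("mask corner moves to:", apply_similarity(a, b, [(1.0, 1.0)]))
```

With more than two markers the fit averages out small tracking errors, which is one reason a mask driven this way tends to be steadier than one parented to a single marker.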

Another thing that will be important for tracking in Mango is the use of survey data. That means the user can take measurements on set, for example the size of objects, the distance between features, the height of the camera etc., and feed this information into the solver. That way the solution can not only be improved, it can also be constrained to certain requirements. Most likely a production like Mango will have different shots of the same scene, with the same set, but from different camera angles. As a matchmover you have to make sure that the different cameras line up with that scene so that you can easily use the same 3d data for all of them. Being able to set certain known constraints for the solution can make that process much easier.
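The simplest possible use of one on-set measurement can be sketched in a few lines of Python: take two reconstructed features whose real distance was measured, and rescale the whole solve so that the reconstructed distance matches. This is only an assumption about how such data could be applied after solving, not how a solver would take constraints into account; `scale_from_survey` and `rescale` are hypothetical helper names.

```python
import math


def scale_from_survey(point_a, point_b, measured_distance):
    """Factor that makes the solved distance between two features match the set measurement."""
    solved_distance = math.dist(point_a, point_b)
    if solved_distance == 0:
        raise ValueError("the two reference features must not coincide")
    return measured_distance / solved_distance


def rescale(points, factor, origin=(0.0, 0.0, 0.0)):
    """Scale 3D points (tracked features or camera positions) about an origin."""
    return [
        tuple(o + factor * (c - o) for c, o in zip(point, origin))
        for point in points
    ]


# Hypothetical solve: two features that were measured to be 2.5 m apart on set.
feature_a = (0.0, 0.0, 0.0)
feature_b = (0.0, 3.0, 4.0)
factor = scale_from_survey(feature_a, feature_b, 2.5)
camera_path = [(2.0, 4.0, 6.0), (2.5, 4.0, 6.0)]
print(rescale(camera_path, factor))
```

If every shot of the same set is rescaled against the same measured pair of features, the different cameras at least agree on scene scale, which is a first step towards sharing the same 3d data between shots.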

There are a few other things I would like to see in the tracker, but these are mainly smaller things like marker influence control and usability improvements like visual track-quality feedback and marker management that can probably be sorted out in a few minutes of some coder’s free time. Yes, Sergey, that’s you! :)

Photo-realistic rendering – Cycles

Just as important as tracking of course is rendering. The plan is to fully harness the insane Global Illumination rendering power of Brecht’s render-miracle Cycles. With that in our toolset we can create and destroy Amsterdam as photorealistic as it can get.

Still there are some things we need.

For example we need a way to efficiently create and use HDR light maps, not only for realistic lighting, but also for correct environment reflections.

Another thing that will have to be solved is noise.

Even though Cycles is already incredibly fast there is always room for improvements.

Besides pure render performance, one thing that is critical for Mango is good render passes: not only the passes needed to re-combine the image from the separate render elements, but also the ones that extract the render data we need to composite the rendering on top of the footage.

Two of the most common passes for that are lamp-shadows and ambient occlusion. Despite not being really physically accurate, they provide a fast and easy way to integrate objects into the live action plate. Often just the contact shadow together with the rendered object and a little bit of color grading is enough to create the illusion of the object being part of the footage.
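As a rough illustration of that quick integration, here is a per-pixel Python sketch: the plate is darkened by the shadow pass and the rendered object is laid over it. The conventions are assumptions made for this example (linear float colors, a premultiplied foreground, and a shadow value where 1.0 means unshadowed), and `composite_pixel` is a made-up name, not a Blender node.

```python
def composite_pixel(plate, shadow, fg, alpha):
    """Integrate a rendered object into a live action plate for one pixel.

    plate  -- (r, g, b) of the footage, linear floats
    shadow -- shadow pass value, 1.0 = unshadowed, 0.0 = fully shadowed
    fg     -- (r, g, b) of the rendered object, premultiplied by alpha
    alpha  -- coverage of the rendered object, 0.0 to 1.0
    """
    # Darken the plate with the shadow pass, then do a premultiplied "over".
    return tuple(p * shadow * (1.0 - alpha) + f for p, f in zip(plate, fg))


plate = (0.8, 0.6, 0.4)
# A pixel in the contact shadow next to the object: plate at half brightness.
print(composite_pixel(plate, 0.5, (0.0, 0.0, 0.0), 0.0))
# A pixel fully covered by the object: only the render is visible.
print(composite_pixel(plate, 1.0, (0.2, 0.2, 0.2), 1.0))
```

An ambient occlusion pass could be multiplied onto the plate in the same way as the shadow value; a color grade would then be applied on top of the result.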

So that’s the quick’n dirty way of doing it. But Brecht already suggested that he might find ways to do it much more elegantly, by extracting the possible light and shadow contribution of Cycles light paths to the live action scene. Personally I have no idea how he will do that, but it sounds awesome! Maybe by even using the footage, camera-mapped to textures, thereby being 100% realistic? In any case, I am looking forward to things to come!

In addition to the passes it should be possible to have the movieclip playing back in the viewport, while at the same time having the cycles render-preview running, ideally with a live shadow-pass being calculated. Think of it! Realtime photorealistic viewport compositing!

:)

Compositing

Masking

Related to compositing and also one of the most important features for any VFX-work is the ability to do quick and efficient masking. The current system of 3d curves on different render-layers with different objectIDs has to be replaced with something more accessible.

There is quite a lot of work to be done, and workflows have to be tested to find out how to make a user-friendly, manageable, efficient UI for masks.

Blender’s mask system should allow quick and rough masking for color grading as well as detailed, animated and even tracked rotoscoping without the hassle of going through a render-layer. There are some good ideas to make masks and mask-editing available in various places, not only in the Compositor but also in the Image Editor, Movie Clip Editor and Video Sequence Editor. Masking should be as accessible, dynamic and powerful as possible. Being a VFX short film, Mango will most likely be a masking orgy!

Luckily Sergey Sharybin is already on that task, supported by Pete Larabell, who also coded the Double Edge Mask.

Sergey has created a wiki development page that sums up the top level design for what is planned: http://wiki.blender.org/index.php/User:Nazg-gul/MaskEditor. And if you remember how fast and awesome the camera tracking module has been coded by him, you can be sure that masking in Blender will be great!

Green Screen Keying

Keying has to be improved, that’s for sure. The channel-key is pretty nice already, but the other keyers can go right into the trashcan. Color-Key and Chroma-Key are just plain unusable for any serious keying. But since Mango will be filmed mostly in front of greenscreen, with certainly different lighting conditions, different camera settings etc., it must be possible to pick the exact keying color rather than rely exclusively on the pure green channel. How this will be achieved is still a bit unclear, but Pete Larabell, who also works on masking, will look into that.
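One way to picture "picking the keying color" is a color-difference matte whose strength is normalised by a sampled screen color, sketched below in Python. This is an assumption for illustration only: it is not how Blender's channel key works, it only handles green screens, and `green_excess` and `key_alpha` are hypothetical names.

```python
def clamp01(value):
    """Clamp a float to the 0.0 .. 1.0 range."""
    return max(0.0, min(1.0, value))


def green_excess(rgb):
    """How much greener a color is than its stronger other channel."""
    r, g, b = rgb
    return g - max(r, b)


def key_alpha(pixel, key_color):
    """Foreground alpha for one pixel, relative to a sampled screen color.

    A pixel as green as the sampled screen gets alpha 0.0, a pixel with no
    green excess gets alpha 1.0, and everything in between falls off linearly.
    """
    reference = green_excess(key_color)
    if reference <= 0:
        raise ValueError("key color must be greener than it is red or blue")
    return 1.0 - clamp01(green_excess(pixel) / reference)


screen = (0.1, 0.7, 0.2)  # hypothetical color sampled from the footage
print(key_alpha(screen, screen))            # the screen itself
print(key_alpha((0.8, 0.6, 0.5), screen))   # a skin tone
print(key_alpha((0.3, 0.55, 0.3), screen))  # a soft edge, e.g. hair against the screen
```

Because the matte is scaled by the sampled color, a dim or unevenly lit screen can still key to zero without having to be pure green.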

Color pipeline and grading tools

Fire/smoke/volumetrics & explosions

Doing Mango without a good amount of kick-ass destructions, dust, debris and detonations is probably not an option.

Blender does have nice smoke, particles and rigid-bodies, but so far these simulations mostly work best in a secure test-environment and are not interacting with each other. Controlling these effects can sometimes be a nerve-racking and tedious experience. Lukas Toenne is doing great work on the node-particles, which should make many more things possible than what we can do with particles now. But to make smoke, fire, simulations and explosions really communicate and influence each other in an animated complex FX shot a lot more work has to be done!

Also the setup of these effects can be streamlined. Fracture shards setup for example is still a bit clunky. We need to find a way to easily control and tweak all the different parameters. This might also be a good time to finally move rigid-body-simulation from Game-Engine into Blender and make it a modifier!

Fix the Blender deps-graph

http://wiki.blender.org/index.php/User:Ton/Depsgraph_2012

Asset Management

Wow, Can’t wait to see that in the trunk :D

Nice job guys!

That was a long read, but totally worth it!

Looking forward to it.

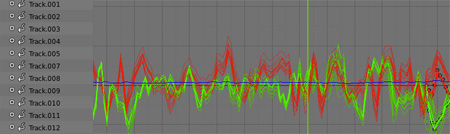

About the graph in general, i find it a thousand times harder to work on IPO curves in 2.5+ than in 2.49, for me its often a riddle which curve exactly is selected and tweaking in curve-edit mode also got harder, not 100% why.

Photo-realistic cycles *dream*, that would be soo awesome. Even with Cycles at its current stage the possible results are already mind-blowing and i’m sure a lot of people from the 3d industry are watching attentively which way cycles will go.

Keep up the good work and a thanks to all you blender devs.

YA!!!! Fire/smoke/volumetrics & explosions improvements, finally. THANK YOU.

ikr :D best news since cycles ^_^

That all sounds extremely exciting. The asset management would be extremely welcome. I’m starting to use Blender for video editing, because all other open-source programs are buggy and awful, and frankly so are most commercial consumer programs. An asset manager and color grading would make it super easy to do humble editing projects like sticking some homevideos together.

Sebastian, you closed all my open questions :)

Im very lucky that someone like you is in the Mango-Boat!

Wow, all these improvements. Can’t choose between it. All needed features are really great. I think personally Color management will be the best shot for ME. overall If I was a coder what a pleasure to join. Go guys

DigiDio

Put EDL support into the VSE, so you can let the REDCODE to EXR converter render only the frames you need, as you very often only need 4 seconds of a 30-second take.

After shooting on set you convert the entire takes to an ‘offline format’, like motion-jpeg. After the rough cut you use an EDL to render out the EXR frames you need and use the workflow you like.

Good grief this is exciting!

Yes! Compositor and Masking sound awesome, especially being able to move footage within the canvas and link it to tracking data. These things are simple tasks in After Effects and are a must for VFX work. Sounds like you have Blender’s VFX shortcomings well pegged down. Sweet. And definitely move rigid body to Blender.

what a thorough & great writeup on the mango targets! I hope many(if not all) of these make it into trunk. I’ve already purchased my DVD and made an extra donation! Thank you BF & Mango Team!

I should also note, that personally, the Depsgraph upgrade is SORELY needed. There are so many elements in Blender’s core that imo are hindered by the current depsgraph. Amongst them that, for my workflow, is most apparent, when compared to other 3d suites, is animation. Whether its with python run constraints, or most especially, the update speed of an even moderately complex rig, that other animation suites can run at a very high speeds(comparatively), something as simple as threading the depsgraph would do wonders for many Blender users, and those suffering from the limitations of the current(but still very “good”) depsgraph.

“it will be possible to move footage around freely on the compositing canvas,” OMG…this is truly awesome

“But to make smoke, fire, simulations and explosions really communicate and influence each other” — that’s gonna be a huge task. if current system fails, the devs should take a look at integrating physBAM which can already simulate smoke,fluid,rigid&softbody in the same domain.

http://physbam.stanford.edu/

That is a great library and used by the industry’s best

http://www.siggraph.org/s2011/content/physbam-physically-based-simulation-0

But what license is it under? It says that the source can be downloaded and used as long as the copyright notice is included, but does that satisfy the licenses blender is run under?

http://physbam.stanford.edu/links/backhistdisclaimcopy.html

But what a great system they have there. dare I say, too big maybe…

I found on Ogre site that it is a MIT license. Is that compatible with blenders licenses? Even if so I can see a lot of work needed to have it implemented and in good working condition for Mango, given the huge task of having the Depsgraph also being in need of much love and attention. But who knows, given the need for blockbuster style simulations and effects, the devs may see need for it… It may even be a valuable addition to the code base, for our summer of code students to build upon.

Other peoples takes on it.

http://www.blendernation.com/2011/03/25/physbam-has-been-released/

http://www.ogre3d.org/forums/viewtopic.php?f=1&t=64379

Back on topic…

I think that this work being considered is amazing from the sheer size of the projects along with the implications it could have for those who want to invest in Professional grade video editors and compositors but who just don’t have the funds… Great stuff indeed.

MIT is a compatible license :)

Blender, its organisation and its developers are just amazing. I just love it. The list is impressive and needs some passionate developers and minds to tackle those tools … but so far that really didn’t seem to be a problem.

I mean – it’s a free tool, capable of so many things and connecting people to the idea of freedom <– that's like…the only way to go.

So thanks to all (the devs, the 'ton', the artists, the helpers and the supporters promoting this tool). I guess I am having one of those emotional, motivated situations ;)

Great article and glad to see these topics finally published! That photo up there brought some nice memories, here’s another one with you Sebastian too. :) http://views.fm/hhoffren/b186a1/lightbox?path=/IMG_4134.JPG

Simply awesome :)

I just had an idea. Ton mentioned in the production update post that the movie could be done in stereoscopic 3D. But that it would require additional funds. So I wonder if it would be possible to instead shoot it in 2D and convert it using Blender. I’m sure it wouldn’t be too complicated to create a node similar to vector blur that uses 3D data to distort a 2D image and so you could create basic models of all the live-action elements and animate them with the rotoscoping tools.

I really miss a GUI/handling of the video sequence editor that is as fluent to use as other editors (like kdenlive etc.)

Are you kidding me? I really dislike kdenlive. I mean, I’m happy it’s there, but the interface is so finicky. Changing the parameters of fade in/outs and such feels like blackmagic to me. If only kdenlive could be more like blender.

Wow, this is all amazing stuff. Not sure how useful this is but here’s a link to a script I have written that matches locked-off cameras in Blender. It uses a measured base line and some other user data to find the location.

http://blenderartists.org/forum/showthread.php?244624-Locked-of-Camera-Placer-(possibly-usable-with-pans)&p=2041760#post2041760

Thanks

Tom

I feel like I have been waiting for these next 6 months my entire Blender Life ^^

I’m totally stunned! :)

Not that I’m not grateful or anything but what about the VSE? IMHO it is in urgent need of some serious care from the devs! When are the nodes gonna be integrated in the sequencer? To apply even the simplest filter we need to render twice. To do color grading we also need to render twice. Maybe these are not production priorities but I feel that Mango is our last hope to see any needed developments in the VSE…

Looking good!

Have you considered/discussed how to address the rolling shutter issue (which is very real, even on the RED epic)?

Looks like a big list of features that are on their way!

The only other thing that is missing from the camera tracker in my opinion, is being able to handle focal length changes

An amazing list to be sure, hope that most of that makes the cut!

Even though there are much sexier features on the list, the most important imo is a proper ram caching system for the compositor. Also massively looking forward to particle nodes and OpenCL acceleration.

On the masking side, I would love to see the introduction of some procedural spline nodes (ellipse, box, polygon, text, etc) that could be used for masking and general motion graphics tasks.

VSE love would be nice but I don’t suppose it is essential for Mango? I hope we can see ffmpeg 0.10 make it into trunk though, timecode and 10bit codecs would be sweet indeed (along with changes to color space for VSE).

Amazing work guys, this will be a huge update for Blender, I hope I’ll see it soon in the trunk. I have a small idea for blender. could we get an option to load a scene but with the default window organization/pattern ? (I’m thinking of loading 2.4x .blends that have the old horizontal layer)

Hi Antoine. There is already a “Load UI” checkbox when opening blender scenes. Just check that off and the file should open without any layout changes.

Oo 3 years I’m using Blender and I never saw it … I’m SOOOO confused xD

Thanks a lot Kjartan !

I noticed that we haven’t seen any new Sponsors lately. How’s the situation?

> Green Screen Keying

I’m a bit puzzled regarding the statements here.

In fact, all keying nodes in Blender are needed for serious keying and have their places within the keying workflow. The chroma key won’t give you very nice edges, but it is a very good tool for pulling automatic garbage mattes, for example.

You might want to take a look into the book “Digital Compositing for Film and Video” by Steve Wright before you accidentally throw out nodes that are useful, but not easy to understand at first :)

And BTW: he also explains how to pull mattes from not so clean backgrounds, so in a nutshell:

* most of the tools are already there

* you might want to learn how to use them

One could add some tools on top of the available ones that try to make the keying process easier to use, though. But throwing out useful nodes isn’t the way to go, I think.

Just my 2 Euro Cent

Peter

Maybe that statement was a bit harsh. They do have their place in the workflow, but the edges they produce are just a nightmare to work with. Of course nobody is going to kick them out, but we do need serious improvements for keying.

That’s really a huge amount of work. Really appreciate and love the developer.

I agree that the VSE could use some love. Right now it really is a weaker part of Blender.

I’ll just second Peter’s comments. I agree that some new keying nodes would be welcome, and if there are fundamental issues with the current ones (I haven’t come across too many) then perhaps they could be worked on or replaced.

But yes, using a node set-up is far, far superior in my experience. You can work with the RGB channels and do math operations, chain together set-ups and mix together all kinds of really cool things to pull off some very impressive keys.

I remember a while back trying the keyers in Blender on some well shot green screen footage and they keyed pretty well, the hair was a little tricky to get right and I wasn’t too pleased with it, moving to a manual keying set-up really made the key shine though, very fine hair detail was evident and overall it just looked better and more refined, granted the initial set-up took a little longer, but I could then group this node and use it as a pipeline wide asset for compositing.

Perhaps something like that could be done for Mango, i.e. a way of having the asset manager handle things like node pre-sets with information on them (a text datablock stored with the node, or linked to the node) and the ability to easily manage massive node pre-set libraries, then all of the pre-sets made with Mango could ship with the official Blender and a repository of pre-sets could be created too.

Overall there really isn’t much you CAN’T do with the nodes available in Blender, after all most of the compositing nodes in Nuke, or the tools in AfterEffects are, after all, a simple chaining together of very simple operations.

Perhaps having pre-sets able to define a set of parameters displayed in the properties panel with custom text headers would allow for rapid creation of new node tools.

Anyway I’m just thinking aloud; I’m really, really excited about all of the possibilities that Mango is going to bring! :)

P.s. this may be outside of the scope of Mango, but having tools to bridge the gap between the 3D viewport and the compositing window would be incredible. After all we have two massively powerful editors, and together they would allow artists to create some truly awesome work flows… Imagine having the ability to put out a depth pass and beauty pass to the 3D viewport as a point cloud and light it with the standard Blender lamps, or to put out a 2.5D point cloud and integrate it with dust clouds as a character walks over dusty ground, or water splashes as they run over wet ground.

Granted there still are a lot of fundamental issues to resolve, but planning for the future (and I really think 3D compositing is the future) is never a bad thing.

P.s.s. Having the ability to create a point cloud from a depth pass would also allow for stereoscopic work flows, smoke, fire, explosions and so on are required to be rendered twice, using the initial set-up (voxels), this would double the render time, yet if it were possible to render out from one camera (a beauty & depth pass and so on) then simply set up two cameras and create a 2.5D point cloud of the explosion in the 3D viewport, you could then very easily tweak and create the two images that would be required for compositing.

Blender could be more, i only know how to write python scripts but dont know how to use it for blender development, but to achieve all that has been laid out as regards to development, then more people have to be involved. So i encourage all blender developers all around the blender community to help.

..Me again.

I really don’t know where to put this (I don’t think I can comment on the asset management blog), but having the ability to link in data and then set a placeholder in the 3D viewport would be very good. Basically you’d pull all of your scene together in a .blend file that links in the proxy’d production data. In the viewport there would be massively cut down models / textures / simulations, but when hitting render Blender would look at the data path set for the proxy object and load in (from disk) the required assets.

That way files stay very small, and all of the required data is loaded into RAM only when required, else the data stays on the hard drive. This could be extended further for massive (MASSIVE!) pre-baked / cached dynamic simulations (rigid body) where animation is fed in via Alembic from the disk for each given frame (and previous / next for vectors).

Daniel Wray I like your thinking on 3d comping, I would love to see a smooth work flow from shooting 3d, tracking 3d, etc… but that is probably beyond the scope of this project

“For example we need a way efficiently create and use HDR light maps, not only for realistic lighting, but also for correct environment reflections.”

No matter what technique you use, always add 2 Mirror Ball shots:

It’s the best, most foolproof and fastest method. (Especially if you want to keep the time between the shot and the environment shot as short as possible, to keep lighting conditions as close as possible).

So even if you decide to use a different technique (with better quality results), I still recommend using it as a backup if things go wrong.

Editing:

Good luck on this: Basically it would mean, fixing a lot of cinepaint, or (even better) pushing blenders paint engine.

Last time I checked, even hugin was a bit picky on hdr output / merging. And keeping the 5k res in mind, this IS very ambitious.

The first thing to do imho: NO WALL BETWEEN VSE, VCE (video clip editor) and COMPOSITOR.

Definitely move rigid bodies into a Blender modifier! Sounds great guys!

With respect to Fracture and destruction.. what about Voronoi shatter by Phymec?

http://www.youtube.com/watch?v=FIPu9_OGFgc

http://www.youtube.com/watch?v=0_qVjLGuT6E

I think the compositor needs noise and denoise nodes. Matching noise and grain between shots from different sources is essential for realistic composites. There might be workarounds for adding noise, but there really isn’t any solution for good temporal denoising.

I am so happy that Blender has created a project like this! To see what Blender now offers and what could be seen in future versions gives hope for many filmmakers on a tight budget =]

i think i won’t be using any other software :lol

respect!

Any news about survey data?